A/B Testing Best Practices: Win the Conversion Game With Data

Most founders make conversion decisions based on gut feeling, leaving money on the table. A/B testing replaces guessing with data: run 20 tests, win 15 with small improvements, and watch your conversion rate compound from 2% to 6%. Stop guessing. Start testing.

You are staring at the numbers on your dashboard, and you need to make a decision. Your headline says "Start Your Free Trial." But would "Get Started Now" convert better? Or maybe "Try Risk-Free for 14 Days"?

You have no clue. So you make a random choice.

This is the problem most founders struggle with. They make critical conversion decisions based on intuition, personal preference, or what "feels right." And because of this, they are leaving huge amounts of money on the table.

The difference between founders who are winning and those who are struggling is not luck. It is not better copywriting or fancier design. The winners are the ones who stopped guessing and started testing. They let the data tell them what really works instead of following their intuition.

This is A/B testing. And if you are not doing it systematically, you are throwing darts in the dark while your rivals are using a laser pointer.

The True Cost of Guessing

Let's be honest about what actually happens when you guess on your landing page choices.

You think the button color matters, so you change it from blue to red because you read somewhere that red converts better. You launch it. Over the next three weeks, it quietly cuts conversions by 2%. But since you have already moved on, you never connect the drop to the button, and that lost 2% was real money that should have been in your pocket.

You change your headline because you personally like the new one better. It's more professional, more on-brand, you say. You don't test it. You just launch it. Conversions drop, but you blame it on "lower traffic that week" and never question your decision.

You add social proof notifications because your competitor has them. You don't measure their effect. You just assume they're helping because they look nice. Perhaps they are. Or perhaps they are hurting, because the notifications distract visitors from your offer. You will never find out.

This is happening at every stage of your funnel. Every single element (headline, button text, button color, form fields, image choice, value proposition wording, CTA placement) is a decision you are making on a hunch rather than on data.

When you are guessing on dozens of variables, the odds that you land on the best-converting combination are very low. You are not making your funnel better. You are just switching things around at random and hoping something works.

What A/B Testing Actually Is (And Why It Matters)

A/B testing is by far the simplest and most effective tool at your disposal. You have two versions of something: the original (version A) and a variant (version B). Half of your visitors see version A, and the other half see version B. You measure which one converts better, and the winner becomes your new starting point.

It really is that simple. But the impact is huge.

Instead of doubting your headline, you know. Instead of speculating whether your CTA button should say "Start Free Trial" or "Get Started," you have the evidence. You stop guessing and start making informed decisions.

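The greatest strength of A/B testing is that the gains compound from one test to the next. You test your headline and get 15% more conversions. You test your button color and get 8% more. You test your form fields and get 12% more. These gains multiply on top of one another: together they add up to about 39% more conversions than where you started (1.15 × 1.08 × 1.12 ≈ 1.39), not just 15 + 8 + 12 = 35%.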

A 2% conversion rate can climb to 4%, 5%, or even 6% just by testing systematically and consistently stacking small improvements.

Founders who adopt A/B testing don't get there by guessing their way to one magic change that doubles conversions. They get there by running 20 tests and winning 15 of them with small 5-15% improvements each. Those wins compound into enormous gains over the months.

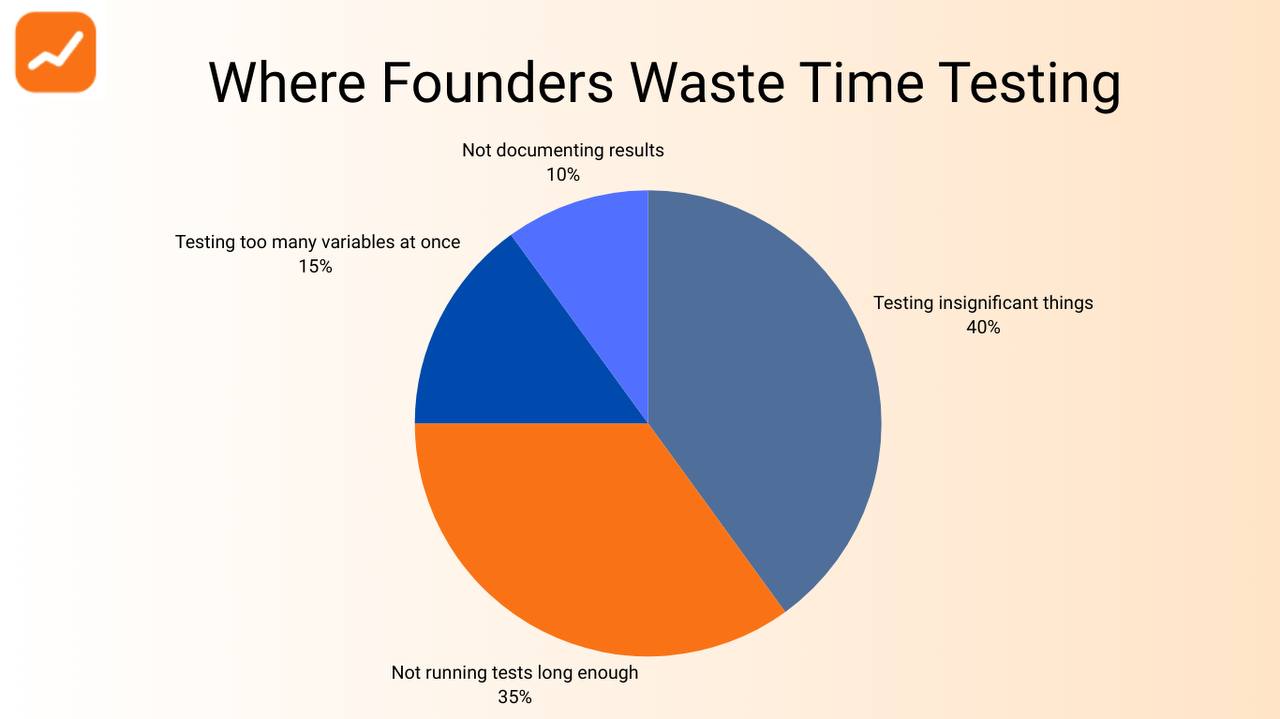

The A/B Testing Mistakes That Kill Your Results

Most founders fail at A/B testing in predictable ways. Knowing these mistakes will save you from spinning your wheels and wasting months on tests that tell you nothing.

Mistake #1: Not Running Tests Long Enough

You start a test on Monday. By Wednesday, version B is winning by 10%. You get so excited that you immediately declare a winner and flip the switch. But the following week, the numbers drift back to normal, and it turns out version A was actually better. What happened?

The problem is statistical significance. You didn't run the test long enough to know whether the difference was real or just random noise. To be confident in a winner, you need more visitors and more conversions.

The rule of thumb: keep the experiment running until each version has at least 100 conversions and the test has run for at least two weeks. Better still, wait until the result reaches 95% statistical confidence before you declare a winner.
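To make "95% statistical confidence" concrete, here is a minimal sketch of a standard two-proportion z-test in Python, using only the standard library. The function name and the visitor and conversion counts are hypothetical, chosen to mirror the Monday-to-Wednesday story above. Most testing tools run a calculation like this for you, though their exact statistical methods may differ. Note that checking the p-value every day and stopping the moment it dips below 0.05 inflates false positives, which is one more reason to commit to a minimum test duration up front.

```python
import math


def two_proportion_z_test(conv_a: int, visitors_a: int,
                          conv_b: int, visitors_b: int) -> tuple[float, float]:
    """Compare two conversion rates; return (z, two_sided_p_value)."""
    rate_a = conv_a / visitors_a
    rate_b = conv_b / visitors_b
    # Pooled rate under the null hypothesis that A and B convert equally well
    pooled = (conv_a + conv_b) / (visitors_a + visitors_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical Wednesday numbers: B "wins" by 10% (2.2% vs. 2.0%)
z, p = two_proportion_z_test(40, 2000, 44, 2000)
print(f"z = {z:.2f}, p = {p:.2f}")  # p is far above 0.05: not significant yet
print("95% confident:", p < 0.05)
```

A p-value below 0.05 corresponds to the 95% confidence threshold. With only a few dozen conversions per version, even a 10% lift is well within the range of random noise.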

Mistake #2: Testing Too Many Things at Once

You change the headline, button color, form fields, and image all at once. Version B converts better. Awesome! But which change actually produced the result? Was it the headline? The button? The image? You have no idea. So you don't know what to keep, what to discard, or what to test next.

Test one variable at a time. Change the headline and nothing else. Once you know whether the new headline works, test the button. This is the only way to learn what is actually driving conversions.

Mistake #3: Testing Insignificant Things

You spend two weeks trying to figure out whether your CTA button should have 5px or 8px of padding. Any effect that small is statistically undetectable at normal traffic levels, so the test can't tell you anything. You are wasting your time on things that won't meaningfully move your conversions.
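Why can't a padding tweak be measured? A standard sample-size approximation for comparing two conversion rates shows that the traffic you need grows roughly with the inverse square of the lift you want to detect. The sketch below assumes a two-sided test at 95% confidence and 80% power (common defaults, not values from this article), and the baseline and lift figures are hypothetical.

```python
import math
from statistics import NormalDist


def visitors_per_variant(baseline: float, relative_lift: float,
                         alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed in EACH version to detect a relative lift.

    Normal-approximation formula for comparing two proportions
    with a two-sided test at significance level `alpha`.
    """
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for 95% confidence
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


# Hypothetical: 2% baseline, a strong headline (+10%) vs. a padding tweak (+1%)
headline = visitors_per_variant(0.02, 0.10)
padding = visitors_per_variant(0.02, 0.01)
print(f"+10% lift: {headline:,} visitors per version")
print(f"+1% lift:  {padding:,} visitors per version")
```

Shrinking the lift you are trying to detect tenfold raises the required traffic roughly a hundredfold, which is why micro-tweaks rarely produce a readable result on a typical landing page.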

Focus your testing on the variables with the greatest impact: headlines, value propositions, CTA copy, form length, images, and pricing presentation. These are the elements that really move your conversion numbers. Test them relentlessly. The micro-optimizations can wait.

Mistake #4: Giving Up After Just One Win

You test a new headline. It wins by 12%. You ship it and feel fantastic. Then you stop testing. Because you are satisfied, you never try the next idea.

This is where most founders stall their growth. One win is not a strategy. It's a lucky break.

The founders who keep growing test continuously. They're always running experiments. They win some, they lose some, but they keep going.

Mistake #5: Ignoring the Importance of Recording Your Results

You run 10 tests. You remember that test #3 was a winner, but you can't remember the details. Was it the headline change or something else?

You didn't take any notes. Now you're re-testing the same things without realizing you've already tested them, and wasting your time.

Document everything: what you tested, what changed, what the results were, and what you learned. This becomes your testing knowledge base. It keeps you from re-running experiments on ideas you've already tested, and it helps you spot the patterns that really work for your audience.
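A spreadsheet works perfectly well for this. If you would rather keep the log in code, here is a minimal sketch in Python. The class and field names are hypothetical, chosen to mirror the four things listed above (what you tested, what changed, the results, and what you learned), and the example record is invented for illustration.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class ExperimentRecord:
    element: str        # what you tested, e.g. "headline" or "CTA copy"
    control: str        # what version A showed
    variant: str        # what version B changed it to
    rate_a: float       # conversion rate of version A
    rate_b: float       # conversion rate of version B
    significant: bool   # did the result reach your confidence threshold?
    learning: str       # what you learned, in one sentence
    ended: date = field(default_factory=date.today)

    @property
    def relative_lift(self) -> float:
        return (self.rate_b - self.rate_a) / self.rate_a


class ExperimentLog:
    def __init__(self) -> None:
        self.records: list[ExperimentRecord] = []

    def add(self, record: ExperimentRecord) -> None:
        self.records.append(record)

    def already_tested(self, element: str, variant: str) -> bool:
        """Check before launching: has this exact change been tested already?"""
        return any(r.element.casefold() == element.casefold()
                   and r.variant.casefold() == variant.casefold()
                   for r in self.records)

    def winners(self) -> list[ExperimentRecord]:
        """Significant tests where version B beat version A."""
        return [r for r in self.records if r.significant and r.rate_b > r.rate_a]


# Hypothetical entry
log = ExperimentLog()
log.add(ExperimentRecord("headline", "Start Your Free Trial", "Try Risk-Free for 14 Days",
                         rate_a=0.020, rate_b=0.0224, significant=True,
                         learning="Risk-free wording outperformed the original."))
print(log.already_tested("headline", "Try Risk-Free for 14 Days"))  # True
```

Calling `already_tested` before you launch anything new is the code equivalent of searching your notes first.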

The Framework That Actually Works

Here is a method for running A/B experiments that produces real results:

Step 1: Identify Your Biggest Leak

Look at your conversion funnel. Where are you losing the largest number of visitors? At the headline? The pricing section? The form? Start there. Test the thing that is costing you the most money.
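If your analytics tool reports how many visitors reach each step, finding the biggest leak is a simple comparison of neighboring steps. This is a minimal sketch in Python; the step names and counts are hypothetical, and it assumes the steps are listed in funnel order.

```python
def biggest_leak(funnel: list[tuple[str, int]]) -> tuple[str, str, int, float]:
    """Find the funnel step that loses the most visitors.

    `funnel` is an ordered list of (step_name, visitors_reaching_step).
    Returns (from_step, to_step, visitors_lost, drop_off_rate).
    """
    if len(funnel) < 2:
        raise ValueError("need at least two funnel steps")
    transitions = []
    for (step_a, count_a), (step_b, count_b) in zip(funnel, funnel[1:]):
        lost = count_a - count_b
        transitions.append((step_a, step_b, lost, lost / count_a))
    # Rank by absolute visitors lost, i.e. "the largest number of visitors"
    return max(transitions, key=lambda t: t[2])


# Hypothetical weekly numbers
funnel = [
    ("Landing page", 10_000),
    ("Pricing section", 4_000),
    ("Signup form", 1_200),
    ("Account created", 200),
]
step_from, step_to, lost, rate = biggest_leak(funnel)
print(f"{step_from} -> {step_to}: {lost:,} visitors lost ({rate:.0%} drop-off)")
# Landing page -> Pricing section: 6,000 visitors lost (60% drop-off)
```

Ranking by absolute visitors lost follows the wording above. Ranking by drop-off rate would instead point at the signup form here (about 83% of those who reach it leave), so it is worth looking at both views before picking your first test.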

Step 2: Form a Hypothesis

Don't test things at random. Have a reason. Your hypothesis might be: "Our headline is too generic. If we make it more specific to our target customer, more people will sign up." Or: "Our CTA button reads 'Start Free Trial,' but people may respond better to 'Try Risk-Free for 14 Days'."

Every test should have a reason.

Step 3: Design Your Variant

Change one element. Only one. If you are testing the headline, change the headline. Don't change the image too. Don't change the button color. Change one thing.

Step 4: Run the Test

Split your traffic 50/50: half of your visitors see version A, and the other half see version B. Run the experiment until you have enough data: at least 100 conversions per version, and preferably two weeks or more.
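Most testing tools handle the split for you. If you are doing "simple traffic splitting" yourself, one common approach is to hash a stable visitor ID so the same person always sees the same version on return visits. This is a minimal sketch in Python; it assumes you already have such an ID (for example, a first-party cookie value), and the function name and experiment label are hypothetical.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str) -> str:
    """Deterministically assign a visitor to "A" or "B" with a 50/50 split."""
    # Salt with the experiment name so each test splits visitors independently
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # bucket in 0..99
    return "A" if bucket < 50 else "B"


# The same visitor always lands in the same version, visit after visit
print(assign_variant("visitor-1234", "headline-test"))
```

Hashing instead of flipping a coin on every page load keeps returning visitors from seeing both versions, which would blur the comparison.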

Step 5: Measure and Declare a Winner

Check the conversion rate for each version. Which one is higher? By how much? Is the difference statistically significant? If version B is better and the difference reaches 95% statistical confidence, you have a winner. Put it into effect.
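The "by how much?" and "is it significant?" questions can be answered together with a confidence interval for the difference between the two rates. This is a minimal sketch in Python using the simple normal-approximation (Wald) interval, which is reasonable at typical landing-page sample sizes but is not the only method; your testing tool may compute intervals differently. The function name and counts are hypothetical.

```python
import math
from statistics import NormalDist


def compare_versions(conv_a: int, visitors_a: int, conv_b: int, visitors_b: int,
                     confidence: float = 0.95) -> dict:
    """Report each rate, B's relative lift, and a confidence interval for B - A."""
    rate_a = conv_a / visitors_a
    rate_b = conv_b / visitors_b
    diff = rate_b - rate_a
    # Unpooled standard error of the difference (Wald interval)
    std_err = math.sqrt(rate_a * (1 - rate_a) / visitors_a
                        + rate_b * (1 - rate_b) / visitors_b)
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    low, high = diff - z * std_err, diff + z * std_err
    return {
        "rate_a": rate_a,
        "rate_b": rate_b,
        "relative_lift": diff / rate_a,
        "diff_interval": (low, high),
        # B is a significant winner only if the whole interval is above zero
        "b_wins": low > 0,
    }


result = compare_versions(200, 10_000, 260, 10_000)  # hypothetical counts
print(f"Lift: {result['relative_lift']:+.0%}, B wins: {result['b_wins']}")
# Lift: +30%, B wins: True
```

If the interval straddles zero, keep the test running (or call it a draw) rather than shipping B on the strength of a higher point estimate.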

Step 6: Document and Repeat

Document what you tested, what changed, and what you learned. Then choose your next biggest leak and start again.

What to Test First (The High-Impact Variables)

If you are just starting with A/B testing, these are the elements that usually make the biggest difference:

Headline variations: Specific vs. generic. Benefit-focused vs. feature-focused. Question format vs. statement format.

CTA copy: "Start Free Trial" vs. "Get Started" vs. "Try for Free" vs. "Create Account."

Form length: How many fields do people actually need to fill out? Can you remove fields and still convert?

Value proposition: Are you leading with the right benefit? Should you emphasize speed, ease, results, or something else?

Social proof: Does showing real customer testimonials, logos, or signup notifications increase conversions?

Pricing presentation: How you show your price matters. $49/month vs. $588/year. Monthly vs. annual toggle.

Begin with these. They are the elements most likely to move your conversions.

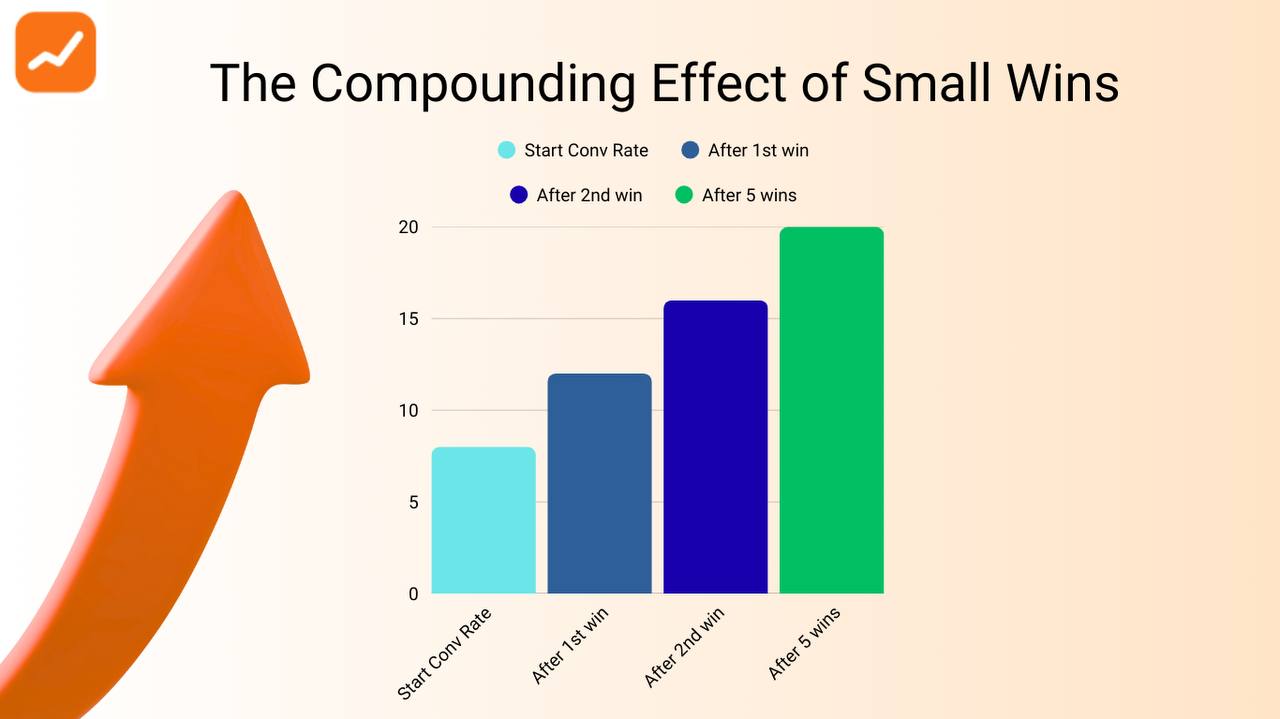

The Compounding Effect of Small Wins

Here is what most founders get wrong about A/B testing: the power is not in one huge test that doubles conversions. The power is in running 20 tests and winning 15 of them with small 5-10% improvements each.

Imagine you have a conversion rate of 2%. You run 20 tests. You get 15 winners with an average 8% improvement per win, which means multiplying your rate by 1.08 each time. The 5 losing tests are never shipped, so they leave your rate unchanged.

1.08^15 (1.08 multiplied by itself 15 times) ≈ 3.17x

Your 2% conversion rate turns into 6.3%. Traffic remains the same. Product stays the same. Just better decision-making based on data rather than guesses.
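The arithmetic above is easy to check in a few lines of Python. This sketch assumes, as the example does, that every win is exactly +8% and that each lift applies to the current rate independently of the others; real lifts vary from test to test and do not always stack this cleanly. The function name is hypothetical.

```python
def compound(baseline: float, lifts: list[float]) -> float:
    """Apply each shipped test's relative lift to the conversion rate in turn."""
    rate = baseline
    for lift in lifts:
        rate *= 1 + lift
    return rate


wins = [0.08] * 15   # 15 winning tests, each assumed to be exactly +8%
losses = [0.0] * 5   # 5 losing tests are not shipped, so they change nothing
final = compound(0.02, wins + losses)
print(f"{final:.2%}")  # 6.34%
```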

That is how top founders grow their businesses. They are not discovering magic bullets. They are continuously testing, learning, and compounding small wins into huge gains.

Getting Started with A/B Testing

You don't need fancy tools or a specialist. Google Optimize, once the go-to free option, was shut down by Google in September 2023, but the native testing feature of your landing page builder, a dedicated testing tool, or simple traffic splitting is enough to start A/B testing today.

Choose an element of your page to test. Form a hypothesis about what might work better. Build your variant. Run the test for at least two weeks. Analyze your data. Ship the winning version. Write it down. Then start your next test.

That's all there is to it. That's the whole process.

The difference between founders who make decisions based on data and those who rely on guesses is enormous. One group keeps increasing its conversion rates. The other is going nowhere, changing things at random without knowing why conversions go up or down.

The Path Forward

Conversion optimization is not magic. It is not about discovering the perfect headline or the perfect button color. It is about running systematic tests, learning from the data, and making small improvements over time.

Each founder has a leak in their conversion funnel. Every website has points of friction where visitors are leaving. A/B testing is the way to locate those points and fix them without making guesses.

Start small. Test one thing. Get your answer. Apply it. Move on to the next test. Keep compounding.

The best time to start A/B testing was months ago, when you were still guessing. The second best time is today.

Stop guessing. Start testing. Let the data decide what really works.

Resources you may find useful:

1. https://getrevdock.com/blog/why-your-visitors-leave-without-buying

2. https://getrevdock.com/blog/how-to-make-more-money-from-the-same-website-traffic